Evaluation is an essential part of the learning process, as it helps to determine whether students have truly grasped the concepts being taught. The item difficulty index (the difficulty level of items or questions) plays a key role in evaluation and represents the ratio of the number of students who chose the correct response to the total number of students who responded to each question. This index can therefore provide a general measure of the difficulty level of tests. Furthermore, the ability of educators to create items and predict their difficulty indices significantly affects the evaluation process.
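The ratio described above can be illustrated with a minimal sketch. The function name `item_difficulty_index` and the sample responses are hypothetical and not taken from the study; the sketch assumes that students who skipped the item (recorded as `None`) are excluded from the denominator. Because the index is the proportion answering correctly, a higher value indicates an easier item.

```python
def item_difficulty_index(responses, correct_option):
    """Return the proportion of respondents who chose the correct option.

    `responses` holds one entry per student; None marks a student who
    did not answer and is excluded (an assumption of this sketch).
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no responses recorded for this item")
    n_correct = sum(1 for r in answered if r == correct_option)
    return n_correct / len(answered)


# Hypothetical answers from eight students to one multiple-choice item.
responses = ["A", "C", "A", "B", "A", None, "A", "D"]
p = item_difficulty_index(responses, correct_option="A")
print(f"difficulty index: {p:.2f}")  # 4 correct out of 7 who answered
```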

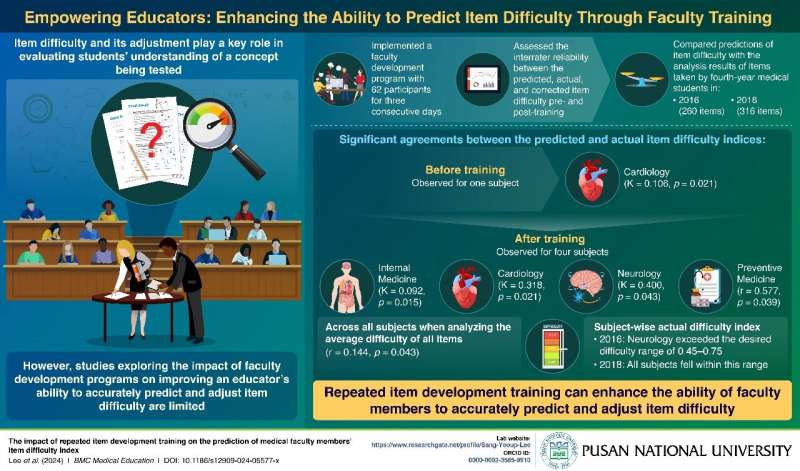

A recurring challenge is that educators may overestimate item difficulty, highlighting the need for improved predictive skills. However, research on faculty development programs aimed at improving the ability of teachers to predict and adjust item difficulty is limited.

To address this gap, a team of researchers at Pusan National University led by Professor Sang Lee, Vice President for Medical Affairs, Professor of Medical Education, and Professor of Family Medicine, conducted a study, the findings of which are published in BMC Medical Education. The study investigated whether repeated item development training for medical school faculty improved their ability to predict and adjust the difficulty level of multiple-choice questions (MCQs).

Explaining the background of the study, Prof. Lee says, “Just like the final stroke completes and perfects a painting, education is perfected through evaluation. Medical school faculty members cannot create high-quality items without proper training. Item development training is essential, and this study demonstrates that the effectiveness of such training increases with repetition.”

Item development workshops were conducted with 62 participants, first in 2016 and again in 2018, and the accuracy of the participants' item difficulty predictions was compared. Before the workshop, the faculty members drafted new items, which were then reviewed. An item development committee trained the faculty members by offering continuous feedback and helping them revise the drafted items according to the national exam standards, with an ideal difficulty range and an application-based focus. The difficulty indices predicted by the participants were then compared with the actual indices obtained from analyses of fourth-year medical students' evaluation results.

The study found that before the training, significant agreement between the predicted and actual item difficulty indices was observed for only one subject, cardiology. In contrast, after the training, significant agreement was observed for four subjects: cardiology, neurology, internal medicine, and preventative medicine. These findings suggest that systematic and effective training can improve the quality of MCQ assessments in medical education.
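To make the idea of agreement between predicted and actual difficulty concrete, here is a rough sketch. The article does not state which statistic the authors used, so the two measures below (mean absolute gap and Pearson correlation) are assumptions chosen purely for illustration, and all numbers are hypothetical.

```python
from math import sqrt


def mean_absolute_gap(predicted, observed):
    """Average absolute difference between predicted and observed indices."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(predicted)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: difficulty indices a faculty member predicted for five
# items, and the indices later observed from student responses.
predicted = [0.40, 0.55, 0.60, 0.70, 0.80]
observed = [0.62, 0.58, 0.75, 0.71, 0.90]

print(f"mean absolute gap: {mean_absolute_gap(predicted, observed):.3f}")
print(f"Pearson r: {pearson_r(predicted, observed):.3f}")
```

In this made-up data the predicted indices are mostly lower than the observed ones, which is the pattern that would correspond to overestimating how difficult the items are.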

Repeated training sessions significantly enhanced faculty members’ ability to predict and adjust item difficulty levels accurately, leading to effective assessments and better educational outcomes. Despite the benefits of the workshop, sustaining these outcomes could be challenging, owing to its three-day duration and the hectic schedules of participating faculty members. However, educators who receive item development and modification training will be better equipped to create items and make precise adjustments to difficulty levels, thereby improving assessment practices.

Moreover, these training programs can be applied across all academic fields. In conclusion, this study advocates for continuous faculty development programs to ensure the creation of appropriate items aligned with the purpose of evaluation.

Talking about the potential applications of the study, Prof. Lee states, “Repeated item development training not only helps adjust the difficulty level but also enhances the construction of the items, increases their discriminating power, and properly addresses the issue of validity.

“Soon there will be an era of item development using AI. For that, studies like ours are important for providing necessary information about existing items and students’ answer data, which will help in developing an AI-powered automated item development program.”

More information: Hye Yoon Lee et al, The impact of repeated item development training on the prediction of medical faculty members’ item difficulty index, BMC Medical Education (2024). DOI: 10.1186/s12909-024-05577-x

Citation: Faculty workshops improve item difficulty prediction in medical education (2024, July 1), retrieved 1 July 2024 from https://phys.org/news/2024-07-faculty-workshops-item-difficulty-medical.html